When you’re working with massive medical images, your computer’s memory can quickly become overwhelmed.

A single CT scan can contain hundreds of slices, and each slice can range from about half a megabyte to several megabytes, depending on resolution and bit depth.

Without proper memory management, your DICOM viewer library will slow to a crawl, leaving doctors waiting and patients anxious.

Why Memory Becomes a Problem

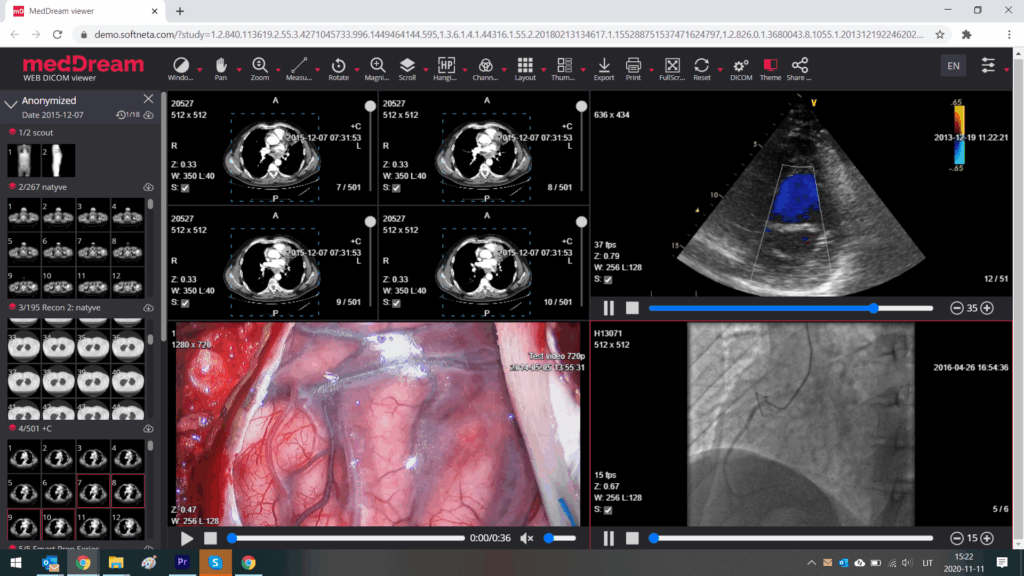

Medical imaging applications face unique challenges that regular photo editors never encounter. You’re not just dealing with a single image – you’re managing entire datasets that can reach gigabytes in size.

A typical cardiac CT angiogram contains around 2,000 images, each measuring 512×512 pixels with 16-bit depth. That’s roughly 1 GB of raw data before any processing begins.
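The 1 GB figure follows directly from the image dimensions. As a quick sanity check, here is a minimal sketch of the arithmetic; the function name is illustrative, and the calculation ignores DICOM headers and any compression.

```python
def stack_size_bytes(num_images, rows, cols, bits_allocated):
    """Raw pixel data size of an image stack, ignoring headers and compression."""
    bytes_per_pixel = bits_allocated // 8
    return num_images * rows * cols * bytes_per_pixel


size = stack_size_bytes(num_images=2000, rows=512, cols=512, bits_allocated=16)
print(f"{size:,} bytes = {size / 1024**3:.2f} GiB")
# prints: 1,048,576,000 bytes = 0.98 GiB
```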

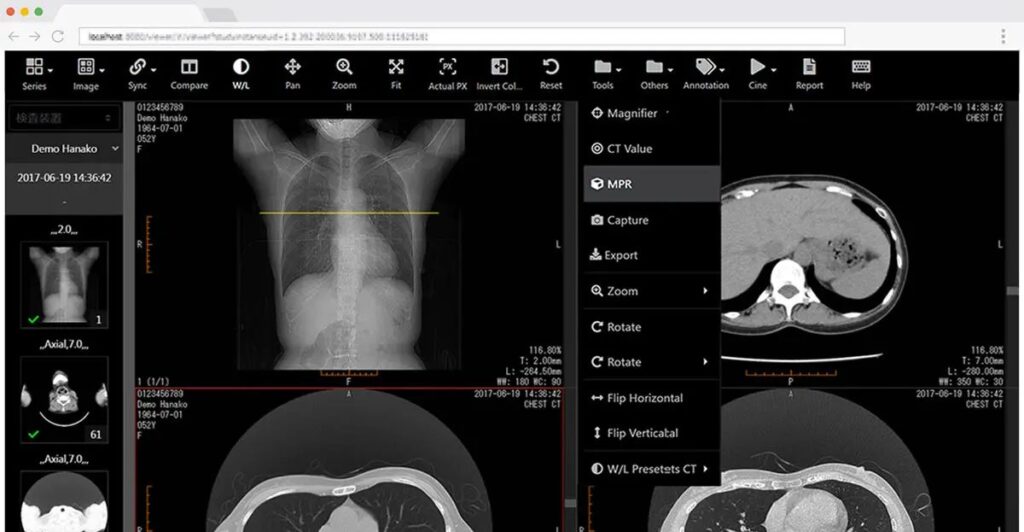

The real problem starts when you need to display these images in real time. Users expect smooth scrolling through image stacks, instant zooming, and seamless window/level adjustments.

All of this requires keeping multiple images in memory simultaneously, which can quickly exhaust your system’s RAM.

Core Memory Management Strategies

Image Caching Systems

Smart caching is your first line of defense against memory overload. Instead of loading every image at once, you can implement a least recently used (LRU) cache that keeps only the most recently viewed images in memory and evicts the ones that have gone untouched the longest.

This approach typically reduces memory usage by 60-80% while maintaining responsive performance.
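To make the idea concrete, here is a minimal sketch of an LRU cache bounded by a byte budget. The class name `SliceCache` and the `load_slice` callback are assumptions made for illustration; a real viewer would plug in its own decoder and would probably size the budget from available RAM.

```python
from collections import OrderedDict


class SliceCache:
    """LRU cache of decoded slices, bounded by a byte budget."""

    def __init__(self, load_slice, max_bytes):
        self._load = load_slice      # hypothetical loader: slice index -> bytes
        self._max_bytes = max_bytes
        self._used = 0
        self._items = OrderedDict()  # oldest entry first, newest last

    def __contains__(self, index):
        return index in self._items

    def get(self, index):
        if index in self._items:
            self._items.move_to_end(index)  # mark as most recently used
            return self._items[index]
        data = self._load(index)
        self._items[index] = data
        self._used += len(data)
        # Evict least recently used slices until we fit the budget again,
        # but never evict the slice we just loaded.
        while self._used > self._max_bytes and len(self._items) > 1:
            _, evicted = self._items.popitem(last=False)
            self._used -= len(evicted)
        return data


# Usage with a fake loader that returns 10-byte "slices"
cache = SliceCache(load_slice=lambda i: bytes(10), max_bytes=30)
for i in (0, 1, 2):
    cache.get(i)
cache.get(0)   # slice 0 becomes the most recently used
cache.get(3)   # over budget: slice 1 is now the oldest and gets evicted
print(1 in cache, 0 in cache)  # prints: False True
```

Budgeting by bytes rather than by slice count is a design choice here: slices from different series can differ in size, so a count-based limit would give a less predictable memory ceiling.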

The trick is predicting which images users will need next. Most medical imaging workflows follow predictable patterns – users scroll through adjacent slices or jump to specific anatomical landmarks.

By pre-loading a small buffer of nearby images, you can create the illusion of having everything instantly available.

Progressive Loading Techniques

Think of progressive loading like streaming a video. Instead of downloading the entire movie before watching, you buffer just enough to start playing while the rest downloads in the background.

Medical images work similarly – you can display low-resolution previews immediately while higher-quality versions load behind the scenes.

This technique is particularly effective for large datasets. A thumbnail-first approach can reduce initial load times by 90% while users navigate to their region of interest.

Once they stop scrolling, full-resolution images replace the thumbnails seamlessly.
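The preview-then-upgrade flow can be sketched as follows. This is an illustration under assumptions, not a definitive design: `ProgressiveViewer`, `load_preview`, and `load_full` are hypothetical names, and the sketch assumes the UI layer calls `on_scroll_idle` once scrolling pauses (for example from a debounce timer).

```python
class ProgressiveViewer:
    """Shows cheap previews while scrolling and upgrades to full resolution when idle."""

    def __init__(self, load_preview, load_full):
        self._load_preview = load_preview  # hypothetical: index -> low-res bytes
        self._load_full = load_full        # hypothetical: index -> full-res bytes
        self.current_index = None
        self.displayed = None              # (quality, data) of what is on screen

    def scroll_to(self, index):
        """Called on every scroll event: show the preview right away."""
        self.current_index = index
        self.displayed = ("preview", self._load_preview(index))

    def on_scroll_idle(self):
        """Called once scrolling pauses: swap in the full-resolution slice."""
        if self.current_index is not None and self.displayed[0] != "full":
            self.displayed = ("full", self._load_full(self.current_index))


# Usage: scroll quickly through ten slices, then stop on the last one
full_loads = []

def fake_full(index):
    full_loads.append(index)
    return b"F" * 64

viewer = ProgressiveViewer(load_preview=lambda i: b"p" * 4, load_full=fake_full)
for i in range(10):
    viewer.scroll_to(i)
viewer.on_scroll_idle()
print(viewer.displayed[0], full_loads)  # prints: full [9]
```

Only the slice the user settles on pays the cost of a full-resolution load; the nine slices scrolled past never leave preview quality.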

Technical Implementation Methods

Memory Pooling

Memory pooling prevents the constant allocation and deallocation of image buffers that can fragment your system’s memory.

Instead of creating new memory spaces for each image, you maintain a pool of pre-allocated buffers that get reused.

This approach can improve performance by 25-40% in applications that frequently switch between images.
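A buffer pool along these lines might look like the sketch below. `BufferPool` is an illustrative name, and the sketch assumes every slice in a series has the same size. In Python the benefit is mainly illustrative; the same pattern matters more in languages with manual memory management.

```python
class BufferPool:
    """Fixed-size pool of reusable pixel buffers."""

    def __init__(self, buffer_size, count):
        self._buffer_size = buffer_size
        # Allocate every buffer up front so steady-state use allocates nothing
        self._free = [bytearray(buffer_size) for _ in range(count)]

    def acquire(self):
        if self._free:
            return self._free.pop()
        # Pool exhausted: fall back to a fresh allocation rather than blocking
        return bytearray(self._buffer_size)

    def release(self, buf):
        if len(buf) != self._buffer_size:
            raise ValueError("buffer does not belong to this pool")
        # The buffer still holds old pixel data; callers must overwrite it fully
        self._free.append(buf)


# Usage: four buffers sized for 512x512 slices at 16 bits per pixel
pool = BufferPool(buffer_size=512 * 512 * 2, count=4)
buf = pool.acquire()
buf[:4] = b"\x01\x02\x03\x04"   # decode a slice into the buffer
pool.release(buf)
again = pool.acquire()
print(again is buf)  # prints: True (the same memory is reused)
```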

| Memory Management Technique | Performance Improvement | Resource Savings |
| --- | --- | --- |
| LRU Caching | 60-80% faster navigation | 70-85% less RAM usage |
| Progressive Loading | 90% faster initial load | 50-60% less bandwidth |
| Memory Pooling | 25-40% better performance | 15-30% less fragmentation |

Lazy Loading Implementation

Lazy loading means you only load images when they’re actually needed. This sounds obvious, but many applications load entire datasets upfront “just in case.”

By implementing viewport-based loading, you only process images that are currently visible or about to become visible.

The key is maintaining a smart prediction algorithm. Most users scroll through images sequentially, so pre-loading 3-5 images in the scroll direction while unloading images that are no longer relevant keeps memory usage stable.
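As a minimal sketch of that sliding window, the code below keeps a few slices ahead of the scroll direction and one behind, and unloads everything else. The function names, the `load_slice` callback, and the defaults of four slices ahead and one behind are assumptions chosen for illustration.

```python
def slices_to_keep(current, direction, total, ahead=4, behind=1):
    """Indices worth holding in memory for the current scroll position."""
    if direction >= 0:   # scrolling toward higher slice indices
        lo, hi = current - behind, current + ahead
    else:                # scrolling toward lower slice indices
        lo, hi = current - ahead, current + behind
    return range(max(0, lo), min(total - 1, hi) + 1)


def update_window(loaded, current, direction, total, load_slice):
    """Unload slices outside the window, then load the missing ones."""
    wanted = set(slices_to_keep(current, direction, total))
    for index in list(loaded):
        if index not in wanted:
            del loaded[index]
    for index in sorted(wanted):
        if index not in loaded:
            loaded[index] = load_slice(index)


# Usage: scroll forward by one slice in a 100-slice series
load_calls = []

def fake_load(index):
    load_calls.append(index)
    return bytes(8)

loaded = {}
update_window(loaded, current=10, direction=+1, total=100, load_slice=fake_load)
update_window(loaded, current=11, direction=+1, total=100, load_slice=fake_load)
print(sorted(loaded), load_calls[-1])
# prints: [10, 11, 12, 13, 14, 15] 15
```

After the first call the window holds slices 9 to 14. Moving one slice forward drops slice 9 and loads only slice 15, so memory use stays flat while scrolling.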

Real-World Performance Impact

Healthcare systems that implement these memory management techniques see dramatic improvements.

Cleveland Clinic reported 70% faster image loading times after implementing progressive loading in their radiology workstations.

Similarly, Mayo Clinic reduced memory-related crashes by 85% through better caching strategies.

The numbers speak for themselves. Without proper memory management, a typical radiology workstation might crash 2-3 times per day when handling large datasets.

With optimized memory handling, these crashes become rare events, improving both physician productivity and patient care.

Advanced Optimization Techniques

Compression and Decompression

Medical images often contain redundant information that can be compressed without losing diagnostic quality.

Lossless compression techniques can reduce file sizes by 50-70% while maintaining perfect image fidelity.

The trade-off is CPU usage during decompression, but modern processors handle this easily.

For real-time applications, you can implement smart decompression that only processes the visible portion of large images.

This approach reduces both memory usage and processing time, creating a more responsive user experience.
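One way to decompress only the visible portion is to compress the image in independent row bands. The sketch below uses Python's `zlib` purely as a stand-in for a lossless codec; DICOM files normally rely on their own lossless transfer syntaxes (such as JPEG-LS or JPEG 2000 lossless), and the band layout and function names here are assumptions for illustration.

```python
import array
import zlib
from typing import List


def compress_in_bands(pixels: bytes, row_bytes: int, rows_per_band: int) -> List[bytes]:
    """Compress an image as independent horizontal bands of rows."""
    band_bytes = row_bytes * rows_per_band
    return [zlib.compress(pixels[i:i + band_bytes])
            for i in range(0, len(pixels), band_bytes)]


def decompress_rows(bands, rows_per_band, first_row, last_row) -> bytes:
    """Decompress only the bands that overlap rows first_row..last_row."""
    first_band = first_row // rows_per_band
    last_band = last_row // rows_per_band
    return b"".join(zlib.decompress(band)
                    for band in bands[first_band:last_band + 1])


# Synthetic 64x64 image with 16-bit pixels (a simple diagonal gradient)
rows, cols = 64, 64
image = array.array("H", ((r + c) % 4096 for r in range(rows) for c in range(cols)))
raw = image.tobytes()
row_bytes = cols * image.itemsize

bands = compress_in_bands(raw, row_bytes, rows_per_band=16)

# Lossless: decompressing every band reproduces the original bytes exactly
assert b"".join(zlib.decompress(b) for b in bands) == raw

# Rows 20..25 are visible, so only the band holding rows 16..31 is decompressed
visible = decompress_rows(bands, rows_per_band=16, first_row=20, last_row=25)
print(len(bands), len(visible) == 16 * row_bytes)  # prints: 4 True
```

Smaller bands mean less wasted decompression per viewport but usually a slightly worse compression ratio, so the band height is a tuning parameter.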

Multi-Threading Strategies

Modern medical imaging applications use multiple CPU cores to handle different aspects of memory management simultaneously.

One thread might handle user interface updates while another manages background image loading. This parallel processing approach prevents any single operation from blocking the entire application.

The key is careful coordination between threads. You don’t want multiple threads trying to load the same image simultaneously, which would waste both memory and processing power.

A well-designed thread pool can improve application responsiveness by 40-60%.
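The coordination problem described above (several callers asking for the same image) can be handled by sharing one in-flight future per slice. This is a sketch under assumptions: `BackgroundLoader` and `load_slice` are hypothetical names, and the sketch never forgets finished futures, so a real viewer would pair it with cache eviction.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class BackgroundLoader:
    """Loads slices on worker threads, starting at most one load per slice."""

    def __init__(self, load_slice, max_workers=4):
        self._load = load_slice   # hypothetical loader: index -> bytes
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._lock = threading.Lock()
        self._futures = {}        # index -> Future, shared by all callers

    def request(self, index):
        """Return a Future for the slice, reusing any load already started."""
        with self._lock:
            future = self._futures.get(index)
            if future is None:
                future = self._pool.submit(self._load, index)
                self._futures[index] = future
            return future

    def shutdown(self):
        self._pool.shutdown(wait=True)


# Usage: five requests for the same slice trigger a single load
calls = []

def slow_load(index):
    calls.append(index)
    time.sleep(0.05)              # simulate disk or network latency
    return bytes([index]) * 4

loader = BackgroundLoader(slow_load)
requests = [loader.request(7) for _ in range(5)]
results = [f.result() for f in requests]
loader.shutdown()
print(calls, len(set(results)))   # prints: [7] 1
```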

Monitoring and Optimization

You can’t optimize what you don’t measure. Modern medical imaging applications include built-in memory monitoring that tracks cache hit rates, memory usage patterns, and performance bottlenecks.

These metrics help identify when memory management strategies need adjustment.

Performance monitoring tools can alert you when memory usage exceeds safe thresholds or when cache efficiency drops below acceptable levels.

This proactive approach prevents performance degradation before users notice any problems.
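A monitor of this kind can be quite small. The sketch below tracks cache hits and misses and reports when either the hit rate or the memory footprint crosses a threshold; the class name and the default thresholds (80% hit rate, 2 GiB) are illustrative assumptions, not recommended values.

```python
class CacheMonitor:
    """Tracks cache hit rate and flags threshold violations."""

    def __init__(self, min_hit_rate=0.8, max_used_bytes=2 * 1024**3):
        self.min_hit_rate = min_hit_rate
        self.max_used_bytes = max_used_bytes
        self.hits = 0
        self.misses = 0

    def record(self, hit):
        """Call once per cache lookup with True for a hit, False for a miss."""
        if hit:
            self.hits += 1
        else:
            self.misses += 1

    @property
    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 1.0  # no lookups yet: nothing to flag

    def alerts(self, used_bytes):
        """Return human-readable warnings for the current state."""
        messages = []
        if used_bytes > self.max_used_bytes:
            messages.append(
                f"memory usage {used_bytes} B exceeds limit {self.max_used_bytes} B")
        if self.hit_rate < self.min_hit_rate:
            messages.append(
                f"cache hit rate {self.hit_rate:.0%} is below {self.min_hit_rate:.0%}")
        return messages


# Usage: 7 hits and 3 misses with 1 GiB in use
monitor = CacheMonitor()
for hit in [True] * 7 + [False] * 3:
    monitor.record(hit)
print(monitor.alerts(used_bytes=1024**3))
# prints: ['cache hit rate 70% is below 80%']
```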

Implementing these memory management techniques in your medical imaging applications will dramatically improve user experience while reducing system requirements.

The investment in proper memory handling pays dividends in reduced hardware costs, improved physician productivity, and better patient outcomes through faster, more reliable diagnostic imaging workflows.